Your Amazon scraper runs fine for a few hours, pulling product data and pricing like a champ. Then everything falls apart: CAPTCHA challenges everywhere, IP bans, and error messages that make you want to throw your laptop out the window. We've been there too many times.

Amazon ramped up their anti-bot detection big time in 2026. Their AWS WAF protection got smarter, tracking browser fingerprints, request patterns, and even how fast you scroll.

Basic Amazon scraping methods that worked last year? Completely useless now. FBA sellers and ecommerce businesses are losing thousands in research time because their scrapers keep getting shut down.

We tested over 40 different bypass techniques to crack Amazon's protection system and found exactly what works.

Why Basic Amazon Scrapers Fail

Most Amazon FBA sellers and ecommerce businesses start with simple scraping tools that work great until they don't. You've probably been there yourself.

Your scraper runs smoothly for a few hours, pulling Amazon product data and competitor pricing. Then, boom: CAPTCHA challenges start popping up everywhere.

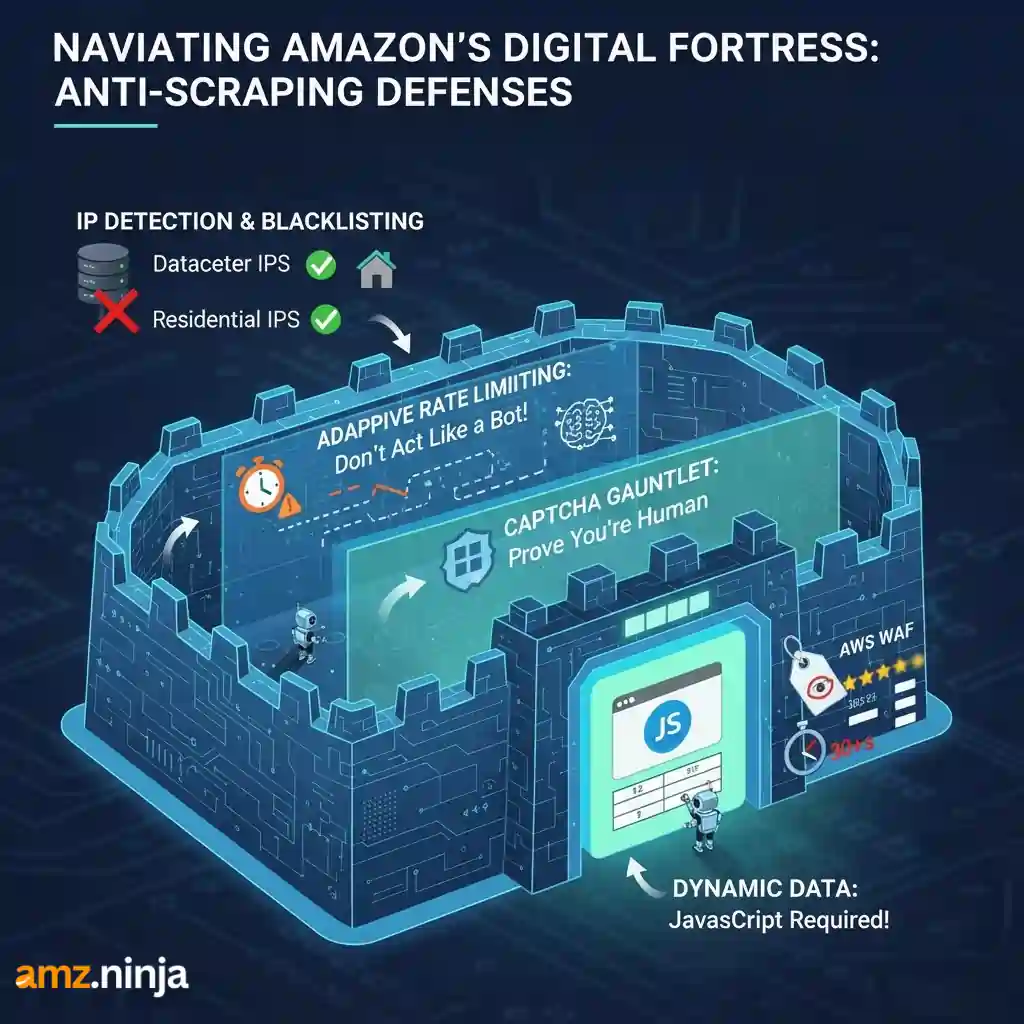

Amazon's anti-bot detection system isn't messing around. They've built multiple layers of defense that analyze your IP reputation, request patterns, browser fingerprints, and even how fast you scroll through pages. When any of these signals look suspicious, Amazon shuts you down hard.

At AMZ.Ninja, we learned this lesson the expensive way. Our early scraping attempts got blocked constantly, costing us valuable time and money.

That's when we realized we needed to think differently about bypassing Amazon CAPTCHA and beating their sophisticated defenses.

Why Amazon Blocks Scrapers and How Their Defense System Works

Amazon employs sophisticated multi-layer protection that evolves constantly. Understanding these systems is crucial before you write a single line of code.

Amazon tracks every request hitting their servers. When datacenter IPs make repetitive calls, especially at inhuman speeds, their systems flag them instantly.

We tested this at AMZ.Ninja with standard datacenter proxies and saw failure rates exceeding 90 percent within the first 200 requests.

Residential proxies work better because they come from real ISP-assigned addresses that look like genuine shoppers browsing from home. These rotate automatically, distributing your scraping load across millions of legitimate IP addresses.
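If you manage a proxy list yourself instead of using a rotating gateway, the rotation idea can be sketched in a few lines. This is a minimal illustration, not any provider's API: the proxy URLs and the `next_proxy_config` helper are hypothetical placeholders.

```python
from itertools import cycle

# Hypothetical proxy endpoints; replace with the addresses your provider issues.
PROXY_POOL = [
    "http://user:pass@proxy-1.example.com:8000",
    "http://user:pass@proxy-2.example.com:8000",
    "http://user:pass@proxy-3.example.com:8000",
]

# cycle() loops over the pool forever, so each call gets the next proxy in turn.
_rotation = cycle(PROXY_POOL)


def next_proxy_config():
    """Return a requests-style proxies dict for the next proxy in the pool."""
    proxy = next(_rotation)
    return {"http": proxy, "https": proxy}
```

Each request would then pass `proxies=next_proxy_config()` to `requests.get`, so consecutive requests leave from different addresses.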

Amazon doesn't just count requests per minute. Their algorithms analyze behavioral patterns like request timing consistency, page view sequences, and session duration.

If you hit product pages too fast or skip typical user actions like viewing images or scrolling, you trigger their alarms.
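One way to avoid perfectly regular request timing is to add random jitter between page loads. The sketch below is only an illustration: the 4 second base and 3 second jitter are assumed values, not thresholds Amazon publishes, and `human_delay` and `paced` are names made up for this example.

```python
import random
import time


def human_delay(base_seconds=4.0, jitter_seconds=3.0):
    """Return a pause length drawn uniformly from [base, base + jitter]."""
    return base_seconds + random.uniform(0.0, jitter_seconds)


def paced(urls, base_seconds=4.0, jitter_seconds=3.0, sleep=time.sleep):
    """Yield each URL, pausing a randomized interval between consecutive URLs."""
    for index, url in enumerate(urls):
        if index > 0:
            # No pause before the first URL, a jittered pause before every later one.
            sleep(human_delay(base_seconds, jitter_seconds))
        yield url
```

Looping with `for url in paced(product_urls): ...` keeps the fetch code unchanged while spacing requests unevenly.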

Amazon deploys several CAPTCHA types strategically: text-based puzzles, image selections, and AWS WAF challenges.

Each CAPTCHA that appears costs precious time, often 30 or more seconds of scraper downtime. Worse, solving them incorrectly raises more red flags on your account or IP.

Product reviews, availability status, and dynamic pricing: these critical data points load via Ajax requests after the initial page renders.

Basic HTTP libraries like Python Requests miss this entirely because they can't execute JavaScript. You get incomplete data that looks correct until you realize half the information is missing.
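A cheap safeguard is to check every scraped record for the fields that tend to load late, so gaps show up immediately instead of weeks later. This is a minimal sketch; the field names are hypothetical and should match whatever your own parser produces.

```python
# Hypothetical field names for data that often arrives via Ajax.
REQUIRED_FIELDS = ("price", "availability", "review_count")


def missing_fields(record, required=REQUIRED_FIELDS):
    """Return the required field names that are absent or empty in a record."""
    return [name for name in required if record.get(name) in (None, "")]


record = {"title": "Example product", "price": "19.99", "availability": ""}
gaps = missing_fields(record)
if gaps:
    print(f"Incomplete record, missing: {', '.join(gaps)}")
```

Records with gaps can be queued for a second pass through a JavaScript-capable fetcher.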

Bypassing Amazon CAPTCHA Without Getting Permanently Banned

CAPTCHA bypass is the biggest pain point we hear about from sellers at AMZ.Ninja. Here's how we approach it using proven methods that scale.

Method One: Stealth Browser Automation

SeleniumBase and similar stealth tools modify browser fingerprints to avoid detection. They tweak automation markers that basic Selenium exposes, making your scraper look more human.

We use this code structure for testing new products before scaling:

```python
from seleniumbase import Driver

# uc=True launches Chrome in SeleniumBase's undetected (UC) mode
driver = Driver(uc=True)
try:
    driver.get("https://www.amazon.com/dp/B096N2MV3H")
    driver.save_screenshot("product_page.png")
finally:
    driver.quit()  # release the browser even if the page load fails
```

This approach dramatically reduces CAPTCHA encounter rates compared to vanilla Selenium. However, it's resource-intensive, consuming significant CPU and memory. At scale, running hundreds of stealth browsers simultaneously becomes expensive and slow.

Method Two: Integrating CAPTCHA Solving Services

Services like 2Captcha or Anti-Captcha offer API-based automatic solving. You detect the CAPTCHA, send the challenge to their service, receive the solution, and submit it back to Amazon.

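```python
# `captcha_solver` and `submit_captcha_solution` are placeholders for your
# solving-service client and your own form-submission helper.
if 'captcha' in response.text.lower():  # case-insensitive match
    captcha_solution = captcha_solver.solve(response.text)
    submit_captcha_solution(captcha_solution)
```

Costs run about 1 to 3 dollars per 1,000 CAPTCHAs. Success rates vary from 70 to 90 percent depending on CAPTCHA complexity. For high-volume scraping, these costs and latency issues add up quickly.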
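To see how quickly this adds up, here is a rough back-of-the-envelope calculation using the price and success ranges above. It assumes failed attempts are billed like successful ones, which varies by service, and the 100,000 monthly volume is just an example figure.

```python
def cost_per_solved(price_per_1000, success_rate):
    """Effective price of one correct solve, assuming failed attempts are billed too."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return price_per_1000 / 1000 / success_rate


def monthly_cost(captchas_to_solve, price_per_1000, success_rate):
    """Total spend needed to get `captchas_to_solve` correct solutions."""
    return captchas_to_solve * cost_per_solved(price_per_1000, success_rate)


# Cheapest case: 1 dollar per 1,000 at 90 percent success.
best_case = monthly_cost(100_000, 1.0, 0.90)
# Most expensive case: 3 dollars per 1,000 at 70 percent success.
worst_case = monthly_cost(100_000, 3.0, 0.70)
print(f"Monthly solving cost: {best_case:.2f} to {worst_case:.2f} USD")
```

The gap between the two cases comes mostly from the price tier, but a lower success rate also inflates the bill because every failed attempt has to be repeated.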

Method Three: Decodo Web Scraping API for Complete Automation

This is where Decodo changes everything for serious sellers. Instead of managing stealth browsers, proxy rotation, and CAPTCHA solving yourself, Decodo handles it automatically, with success rates above 99 percent.

```python
import requests

payload = {
    'url': 'https://www.amazon.com/dp/B096N2MV3H',
    'render_js': True,
    'premium_proxy': True,
    'auto_captcha': True
}

response = requests.post(
    'https://api.decodo.com/scrape',
    json=payload,
    auth=('your_api_key', '')
)

data = response.json()
```

Decodo automatically retries with different IPs if CAPTCHAs appear, uses built-in residential proxies from its pool of more than 65 million IPs, and charges flat rates with no per-CAPTCHA fees.
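The retry-on-a-new-IP idea is easy to picture on the client side. The sketch below is not Decodo's implementation; `fetch_with_retries`, `fetch`, and `is_blocked` are hypothetical names, and the fetch function is passed in so the logic stays independent of any HTTP library.

```python
from itertools import islice


def fetch_with_retries(url, fetch, proxies, is_blocked, max_attempts=3):
    """Fetch `url` through successive proxies until a response is not blocked.

    fetch(url, proxy) returns the page body; is_blocked(body) returns True
    when the body looks like a CAPTCHA or block page.
    """
    for proxy in islice(proxies, max_attempts):
        body = fetch(url, proxy)
        if not is_blocked(body):
            return body, proxy
    raise RuntimeError(f"still blocked after up to {max_attempts} proxies: {url}")
```

Capping the attempts matters: retrying forever against a blocked page burns proxies and makes the traffic pattern look even less human.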

Amazon Scraping with Login Sessions: Accessing Protected Data

Some valuable Amazon data sits behind authentication walls: order history, seller-only pages, personalized recommendations. Getting this data requires maintaining session state across requests.

Manual Session Based Scraping

You need to replicate the login flow, extract form tokens, handle cookies, and maintain IP consistency:

```python
import requests
from bs4 import BeautifulSoup

# A single Session object keeps cookies across the whole login flow
session = requests.Session()

login_page = session.get('https://www.amazon.com/ap/signin')
soup = BeautifulSoup(login_page.content, 'lxml')

form_data = {
    'email': 'your_email@example.com',
    'password': 'your_password',
    # Hidden form token read from the sign-in page
    'appActionToken': soup.find('input', {'name': 'appActionToken'})['value']
}

login_response = session.post(
    'https://www.amazon.com/ap/signin',
    data=form_data
)

# Crude success check on the response body
if 'Your Account' in login_response.text:
    protected_data = session.get('https://www.amazon.com/your-orders')
```

The challenge? CAPTCHAs during login, two-factor authentication requirements, and session expiration management. Amazon also ties sessions to IP addresses, so rotating proxies breaks authentication.
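The login code above reads one hidden field by name. If the form carries more than one hidden field, collecting all of them at once is less fragile than naming each. Here is a minimal sketch using only the standard library; `HiddenInputCollector` and `hidden_fields` are hypothetical helper names.

```python
from html.parser import HTMLParser


class HiddenInputCollector(HTMLParser):
    """Collect name/value pairs from every <input type="hidden"> in a page."""

    def __init__(self):
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        if tag != "input":
            return
        attributes = dict(attrs)
        input_type = (attributes.get("type") or "").lower()
        name = attributes.get("name")
        if input_type == "hidden" and name:
            # A missing value attribute is stored as an empty string.
            self.fields[name] = attributes.get("value") or ""


def hidden_fields(html):
    """Return a dict of all hidden form fields found in `html`."""
    collector = HiddenInputCollector()
    collector.feed(html)
    return collector.fields
```

The result can be merged into the login payload, for example `form_data = {**hidden_fields(login_page.text), 'email': ..., 'password': ...}`.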

Sticky Session Proxies for Authentication

Sticky sessions maintain the same IP address across multiple requests. This lets you log in once and keep scraping protected pages without re-authenticating.

Decodo supports sticky sessions that persist your authenticated state while handling cookie management automatically. This eliminates the headache of manually tracking session cookies and dealing with expired authentications.
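For a self-managed proxy pool, the sticky-session idea can be approximated by mapping each logged-in account to one fixed proxy. This is only a sketch of the concept, not how any provider implements it; `sticky_proxy` and the example pool are hypothetical.

```python
import hashlib


def sticky_proxy(session_key, proxy_pool):
    """Pick one proxy per session key, so repeat calls reuse the same IP."""
    if not proxy_pool:
        raise ValueError("proxy_pool must not be empty")
    # Hash the key so the mapping is stable across runs and processes.
    digest = hashlib.sha256(session_key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(proxy_pool)
    return proxy_pool[index]


# Hypothetical pool; the same account always maps to the same entry.
pool = ["http://proxy-1.example.com:8000", "http://proxy-2.example.com:8000"]
proxy_for_account = sticky_proxy("seller-account-01", pool)
```

The mapping only stays stable while the pool itself is unchanged, so adding or removing proxies means affected accounts need to log in again.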

Handling Dynamic Ajax Content and JavaScript Rendered Data

When we first built AMZ.Ninja's product research database, we discovered a painful truth: basic scrapers miss half the data Amazon displays to users. Product reviews, stock levels, and pricing updates all load asynchronously via Ajax after the page renders.

Intercepting Ajax Endpoints Directly

Advanced scrapers can bypass browser rendering by calling Amazon's hidden Ajax endpoints directly:

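```python
import requests

# Browser-like headers; the User-Agent string here is only an example
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
    'Accept-Language': 'en-US,en;q=0.9'
}

ajax_url = 'https://www.amazon.com/hz/reviews-render/ajax/reviews/get/'
params = {
    'asin': 'B096N2MV3H',
    'pageNumber': 1,
    'pageSize': 10
}

response = requests.get(ajax_url, params=params, headers=headers)
reviews_data = response.json()
```

This method is faster and uses fewer resources than rendering full pages. However, Amazon frequently changes these endpoints, making your scraper brittle. You also need authentication tokens embedded in the initial page HTML.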

Headless Browser Rendering

Tools like Selenium and Playwright render full JavaScript to capture all dynamic content:

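```python
from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC

options = Options()
options.add_argument('--headless=new')  # run Chrome without a visible window

driver = webdriver.Chrome(options=options)
driver.get('https://www.amazon.com/product-reviews/B096N2MV3H')

# Wait up to 10 seconds for review elements to appear in the DOM
wait = WebDriverWait(driver, 10)
reviews = wait.until(
    EC.presence_of_all_elements_located((By.CLASS_NAME, 'review'))
)
```

This captures everything users see but runs 5 to 10 times slower than HTTP requests. It also consumes massive memory and CPU resources at scale.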

Decodo JavaScript Rendering in the Cloud

Decodo executes JavaScript rendering on their infrastructure, not your servers:

```python
import requests

payload = {
    'url': 'https://www.amazon.com/product-reviews/B096N2MV3H',
    'render_js': True,
    'wait_for_selector': '.review',
    'premium_proxy': True
}

response = requests.post(
    'https://api.decodo.com/scrape',
    json=payload,
    auth=('your_api_key', '')
)

reviews = response.json()['reviews']
```

You get fully rendered content with all Ajax data loaded, no local browser overhead, and stealth rendering that avoids automation detection.

AMZ.Ninja's Final Recommendations for Amazon Sellers

Building your Amazon scraper takes work, but running it without constant blocks takes real skill. We went from 90 percent failure rates to consistent data extraction once we combined stealth browsers with residential proxies and smart rate limiting.

Your scraper needs to handle JavaScript rendering, rotate IPs properly, and solve CAPTCHAs automatically. Testing different proxy providers cost us thousands before finding what actually works at scale.

Success rates above 95 percent are totally possible when you nail the setup. Are you still losing money to failed scraping attempts?
